What is a data center switch?

A data center switch is a high-performance switching device specifically designed for data center networks. It offers higher bandwidth, lower latency, and greater scalability than traditional enterprise-class switches. The main role of a data center switch is to connect servers, storage devices and other network devices so that they can communicate and share data with each other.

What does a data center switch do? (Main functions)

- High-speed data transmission

A data center switch provides high-speed data transmission to meet the needs of large-scale data center networks. Its high-bandwidth connections ensure fast, real-time data transmission and response.

- Data distribution and routing

A data center switch forwards each packet to its target device according to the configured network rules and routing policies. For routed traffic, it selects the best path mainly from the packet's destination address, and with policy-based routing or ECMP hashing it can also take the source into account.
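Route lookups in routers and Layer 3 switches use longest-prefix match: among all routes that contain the destination address, the most specific one wins. The following is a minimal Python sketch of that rule using the standard `ipaddress` module. The routing table and next-hop names (`uplink-to-core`, `spine-1`, `leaf-port-7`) are hypothetical, and real switches perform this lookup in hardware tables rather than with a linear scan.

```python
import ipaddress

# Hypothetical routing table: prefix -> next hop / egress port (illustrative only).
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "uplink-to-core",   # default route
    ipaddress.ip_network("10.0.0.0/8"): "spine-1",
    ipaddress.ip_network("10.1.2.0/24"): "leaf-port-7",
}


def lookup(dst: str) -> str:
    """Return the next hop for dst using longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in ROUTES if addr in net]  # default route always matches
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


print(lookup("10.1.2.5"))  # leaf-port-7
print(lookup("8.8.8.8"))   # uplink-to-core
```

Here `10.1.2.5` matches all three prefixes, and the /24 wins because it is the most specific.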

- Virtualization support

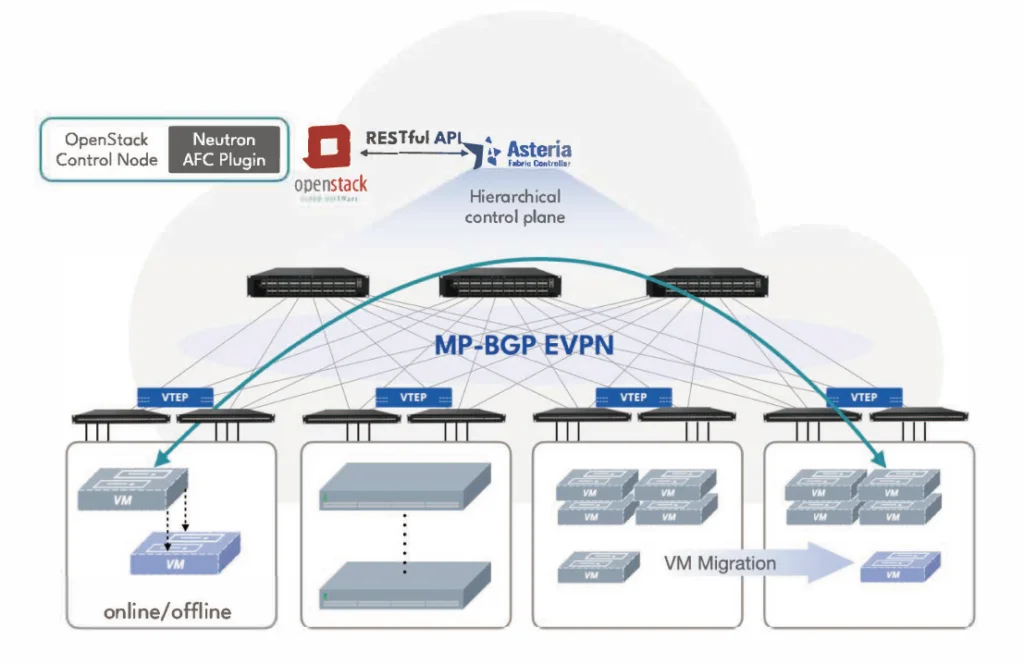

Data center switches support virtualization technologies such as virtual LANs (VLANs) and virtual routing and forwarding (VRF), which isolate different virtual networks from one another and let each one be managed separately. This improves network performance and security and simplifies network management and configuration.
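VRF isolation can be pictured as one independent routing table per tenant. This sketch, with hypothetical tenant names and VLAN next hops, shows two tenants using the same `10.0.0.0/24` prefix without conflict, because every lookup is scoped to a single VRF.

```python
import ipaddress
from typing import Optional

# Hypothetical per-VRF routing tables. Both tenants reuse 10.0.0.0/24,
# which is fine because each VRF is a separate table.
VRFS = {
    "tenant-a": {ipaddress.ip_network("10.0.0.0/24"): "vlan-100"},
    "tenant-b": {ipaddress.ip_network("10.0.0.0/24"): "vlan-200"},
}


def vrf_lookup(vrf: str, dst: str) -> Optional[str]:
    """Longest-prefix match restricted to one VRF's table."""
    addr = ipaddress.ip_address(dst)
    table = VRFS[vrf]
    matches = [net for net in table if addr in net]
    if not matches:
        # No route in this VRF; the lookup never falls through to another tenant.
        return None
    return table[max(matches, key=lambda net: net.prefixlen)]


print(vrf_lookup("tenant-a", "10.0.0.5"))  # vlan-100
print(vrf_lookup("tenant-b", "10.0.0.5"))  # vlan-200
```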

- Load balancing

Data center switches support load balancing, evenly distributing network traffic across different servers and devices. This helps optimize server resource utilization and improves system performance and reliability.

- Link aggregation

Data center switches support link aggregation, which bundles multiple physical ports into a single high-bandwidth logical link. This increases data throughput and provides redundancy: if one member link fails, traffic continues over the remaining links, keeping the data center network reliable and available.
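Switches typically spread traffic over the members of an aggregated link by hashing packet header fields, so every packet of one flow uses the same member and stays in order. The sketch below illustrates the idea under simplifying assumptions: it hashes the 5-tuple with CRC-32 and takes the result modulo the number of active members. The interface names are hypothetical (SONiC-style), and real switch ASICs use their own configurable hash functions, often with resilient hashing so that a member failure does not remap unaffected flows as this naive modulo scheme does.

```python
import zlib
from typing import List, Set, Tuple

Flow = Tuple[str, str, int, int, int]  # src IP, dst IP, protocol, src port, dst port


def pick_member(flow: Flow, members: List[str], up: Set[str]) -> str:
    """Choose a LAG member for a flow by hashing its 5-tuple over the active links."""
    active = [m for m in members if m in up]
    if not active:
        raise RuntimeError("all LAG members are down")
    key = "|".join(map(str, flow)).encode()
    return active[zlib.crc32(key) % len(active)]


members = ["Ethernet0", "Ethernet4", "Ethernet8", "Ethernet12"]  # hypothetical 4 x 25G LAG
flow = ("10.0.0.1", "10.0.1.1", 6, 49152, 443)

first = pick_member(flow, members, set(members))
# Simulate a failure of the chosen member: the flow moves to a surviving link.
survivor = pick_member(flow, members, set(members) - {first})
print(first, "->", survivor)
```

With four 25G members the bundle offers up to 100G in aggregate, but any single flow is still limited to the speed of one member link.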

Application Scenarios

- Build a high-performance, flexible and scalable cloud data center network

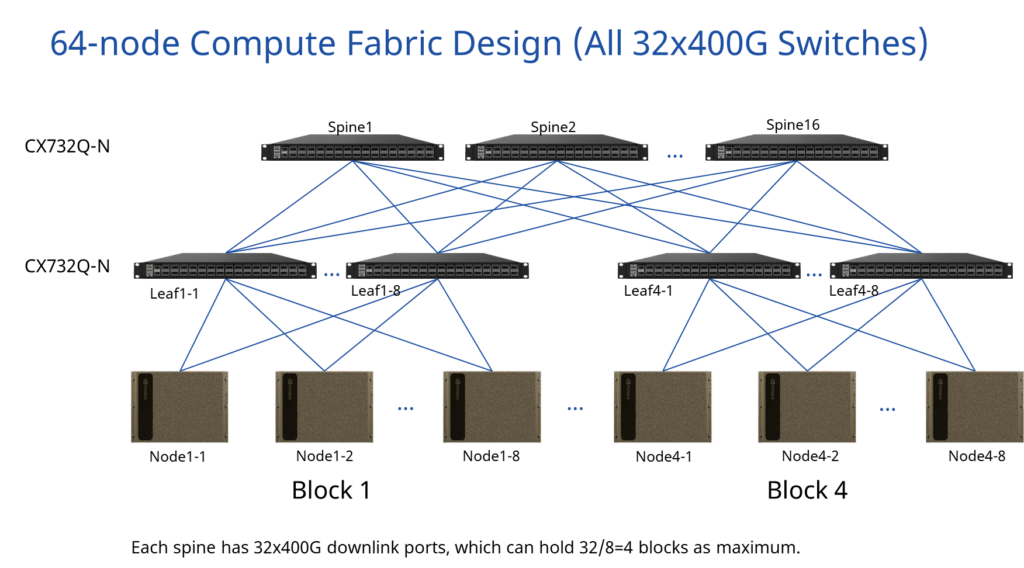

In a cloud data center, CX732Q-N/CX564P-N and CX532P-N/CX308P-48Y-N switches can be used to build a flat spine-leaf network interconnected by 40GE/100GE ports. Using data center protocols such as VXLAN, the fabric forms a non-blocking large Layer 2 network, so virtual machines can migrate across the whole data center and user services can be deployed flexibly. This flexibility greatly simplifies the cloud network infrastructure, and the fabric scales out horizontally with ease, which substantially reduces the TCO (Total Cost of Ownership) of the cloud network and brings cloud computing's pay-as-you-grow model to the network itself.
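VXLAN (RFC 7348) builds that large Layer 2 network by wrapping each Ethernet frame in UDP (destination port 4789) behind an 8-byte VXLAN header carrying a 24-bit VXLAN Network Identifier (VNI). That gives a fabric about 16 million segments instead of the 4094 usable VLAN IDs. This minimal Python sketch only packs and parses the VXLAN header; it does not build the outer Ethernet/IP/UDP headers.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return word1 >> 8


hdr = vxlan_header(0x123456)
print(hdr.hex())       # 0800000012345600
print(parse_vni(hdr))  # 1193046
```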

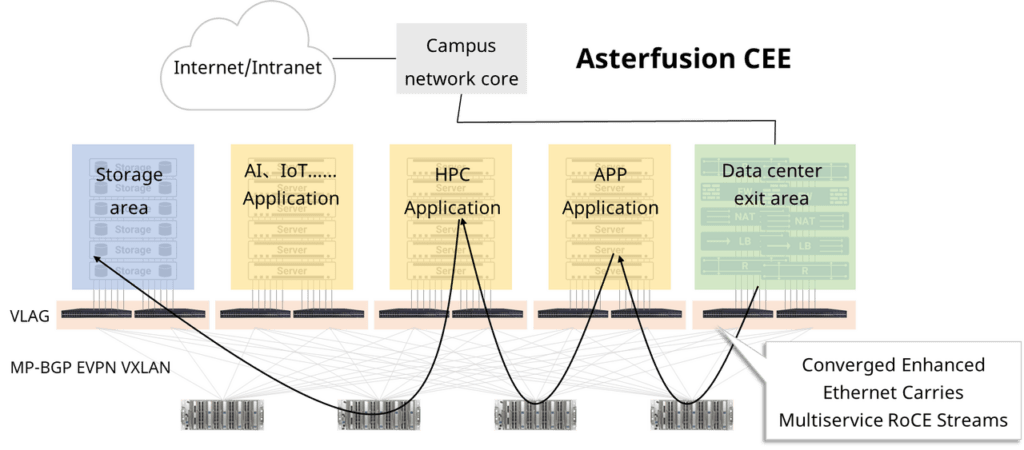

- Build Converged Enhanced Ethernet (CEE) to carry multiple services

High-performance computing (HPC), distributed storage, and similar services demand low-latency, high-bandwidth networks, and meeting that demand often means deploying a separate InfiniBand (IB) network. An IB network is expensive to build, requires more specialized engineering skills, and carries additional hardware and operating costs compared with Ethernet.

CX-N series cloud switches use standard Ethernet protocols and open hardware and software technologies, and support lossless Ethernet. With low latency, high bandwidth, and rich data center features, they let users build a Converged Enhanced Ethernet (CEE) network that simplifies the network, reduces the difficulty of operation and maintenance, lowers TCO, and delivers a higher ROI.
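Lossless Ethernet relies largely on Priority-based Flow Control (PFC, IEEE 802.1Qbb): when the receive buffer for one traffic class fills past a threshold, the switch tells its upstream neighbor to pause only that priority instead of dropping packets. The simplified model below shows the XOFF/XON hysteresis behind that behavior. The threshold values are hypothetical, and real PFC signals a per-priority pause time in a PFC frame (a pause time of zero resumes transmission) rather than returning strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PfcQueue:
    """Simplified ingress buffer for one priority with XOFF/XON thresholds (bytes)."""
    xoff: int             # pause the sender at or above this occupancy
    xon: int              # resume the sender at or below this occupancy
    occupancy: int = 0
    paused: bool = False

    def enqueue(self, nbytes: int) -> Optional[str]:
        self.occupancy += nbytes
        if not self.paused and self.occupancy >= self.xoff:
            self.paused = True
            return "PAUSE"  # ask the upstream port to stop sending this priority
        return None

    def dequeue(self, nbytes: int) -> Optional[str]:
        self.occupancy = max(0, self.occupancy - nbytes)
        if self.paused and self.occupancy <= self.xon:
            self.paused = False
            return "RESUME"  # buffer has drained; the sender may transmit again
        return None


q = PfcQueue(xoff=1000, xon=500)  # hypothetical thresholds
print(q.enqueue(600), q.enqueue(500), q.dequeue(400), q.dequeue(300))
# None PAUSE None RESUME
```

The gap between XOFF and XON keeps the port from toggling pause on and off for every packet. In practice a switch must also reserve buffer headroom above XOFF for data already in flight on the cable when the pause is sent.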

What is the purpose of a data center switch? (Key features)

Data center switches play a key role in data center architecture with the following key features:

- Reliability and high availability

A data center switch provides redundancy and fault-tolerance mechanisms to keep the data center network highly available. Technologies such as hot-standby redundant devices and link aggregation enable seamless failover, so the system keeps running when a component fails.

- Network security

Data center switches offer rich security functions, such as access control lists (ACLs), virtual private networks (VPNs), and firewalls. These mechanisms protect data and keep sensitive information secure and private.
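An ACL is an ordered list of permit/deny rules evaluated top-down, where the first matching rule decides the packet's fate. The sketch below assumes the common convention of an implicit "deny all" at the end of the list (platforms differ, so check yours); the rule set and addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AclRule:
    action: str                  # "permit" or "deny"
    src: str                     # source prefix, e.g. "10.1.0.0/16"
    dst: str                     # destination prefix
    dport: Optional[int] = None  # None matches any destination port


def evaluate(acl: List[AclRule], src_ip: str, dst_ip: str, dport: int) -> str:
    """Return the action of the first matching rule, or the implicit deny."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in acl:
        if (src in ipaddress.ip_network(rule.src)
                and dst in ipaddress.ip_network(rule.dst)
                and (rule.dport is None or rule.dport == dport)):
            return rule.action
    return "deny"  # assumed implicit deny at the end of the list


# Hypothetical policy: the app subnet may reach one server on HTTPS only.
ACL = [
    AclRule("permit", "10.1.0.0/16", "10.2.0.10/32", 443),
    AclRule("deny", "10.1.0.0/16", "10.2.0.0/16"),
]

print(evaluate(ACL, "10.1.5.5", "10.2.0.10", 443))  # permit
print(evaluate(ACL, "10.1.5.5", "10.2.0.10", 22))   # deny
```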

- Scalability and Flexibility

Data center switches scale flexibly to support growing data center network requirements. Organizations can add switches and bandwidth as needed to keep up with changes in size and demand.

- Performance Optimization

A data center switch optimizes the performance of the data center network by providing high bandwidth and low-latency data transmission capabilities. It can quickly handle large amounts of data traffic, ensuring fast data transmission and response, and improving the overall performance and efficiency of the system.

- Management and Monitoring

The data center switch provides powerful management and monitoring functions that allow real-time monitoring and management of network traffic, device status and performance. Through the centralized management interface, administrators can configure, troubleshoot and optimize the performance of the switch, improving the management efficiency and maintainability of the network.
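A basic monitoring calculation is port utilization from two samples of an interface's byte counter. This sketch assumes 64-bit octet counters (such as SNMP `ifHCInOctets` from IF-MIB, or an equivalent counter from streaming telemetry) and uses modular arithmetic so a counter wrap between samples is handled correctly.

```python
COUNTER_MAX = 2**64  # assumed 64-bit octet counter


def utilization_pct(prev_octets: int, curr_octets: int,
                    interval_s: float, speed_bps: float) -> float:
    """Average link utilization (%) between two counter samples."""
    delta = (curr_octets - prev_octets) % COUNTER_MAX  # survives a counter wrap
    bits_per_s = delta * 8 / interval_s
    return 100.0 * bits_per_s / speed_bps


# 187.5 GB received in 30 s on a 100G port is 50 Gbit/s, i.e. 50% utilization.
print(utilization_pct(0, 187_500_000_000, 30, 100e9))  # 50.0
```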

What is the typical data center network architecture?

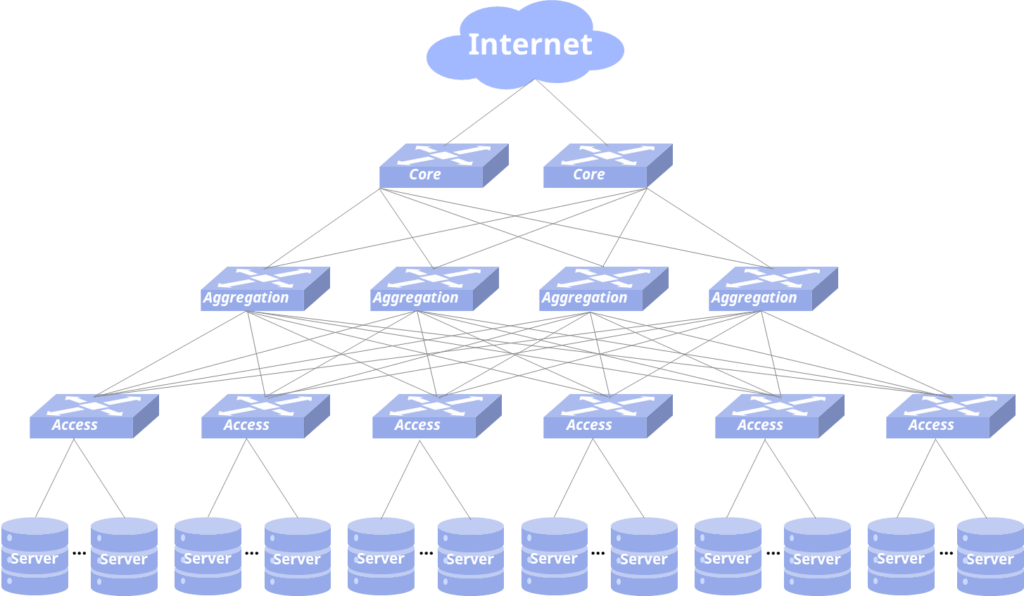

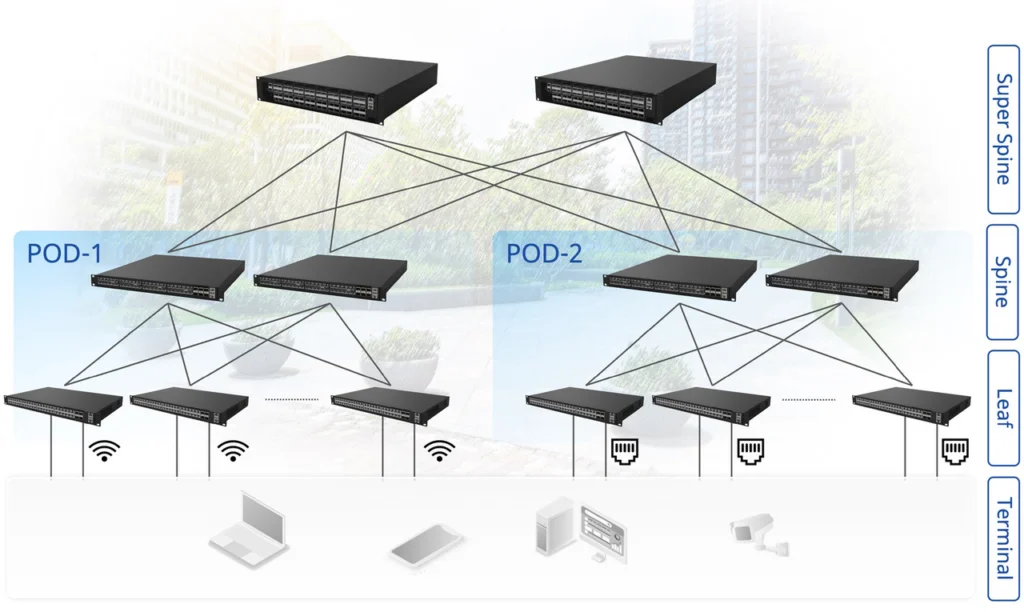

Hierarchical Network

A hierarchical (three-tier) network is a tree-like aggregation system with multiple inflow points (sources) and one outlet (sink). It has three layers:

- Access Layer: Access switches physically connect to servers. They are usually installed at the top of the rack, so they are also called ToR (Top of Rack) switches.

- Aggregation Layer: The aggregation switch connects the access switches under the same Layer 2 network (VLAN) and provides other services, such as firewall, SSL offload, intrusion detection, network analysis, etc. It can be a Layer 2 switch or a Layer 3 switch.

- Core Layer: The core switch provides high-speed forwarding of packets to and from the data center, and provides connectivity for multiple Layer 2 local area networks (VLANs). The core switch usually provides a resilient Layer 3 network for the entire network.

In the traditional three-tier network, STP (Spanning Tree Protocol) usually runs between the aggregation layer and the access layer. STP blocks redundant links, which wastes bandwidth, and it is prone to failures that can affect the entire VLAN. The three-tier design also struggles to carry the “east-west traffic” (server-to-server traffic) generated by modern cloud data centers, for example by frequent virtual machine migration, and it does not adapt well to very large networks. To carry ever-growing “east-west traffic”, data center networks have moved toward a flatter two-tier architecture, and the spine-leaf architecture has become widely used.

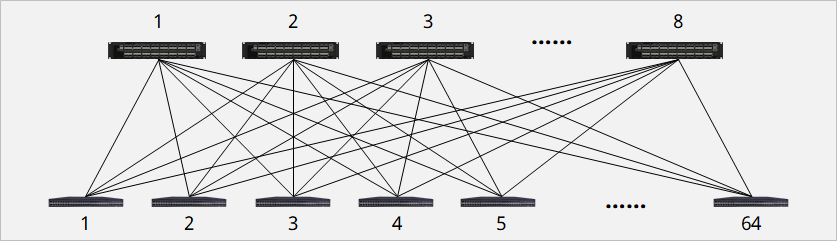

Spine-Leaf Network

The spine-leaf topology offers high reliability, high performance, and scalability, and is favored by many large data centers and cloud computing networks. Below is the Asterfusion AI Network Proposal.

A spine-leaf network consists of an upper layer of spine switches and a lower layer of leaf switches: the spine switches act as aggregation switches and the leaf switches act as access switches. Every leaf switch connects to every spine switch, so traffic between servers on different leaf switches always crosses exactly one spine (source leaf, spine, destination leaf). This short, predictable path greatly improves data transmission efficiency, which is especially important in high-performance computing clusters.
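In a two-tier spine-leaf fabric, sizing is simple arithmetic. The sketch below assumes one uplink from every leaf to every spine, one server per leaf downlink port, and spine ports running at the leaf uplink speed. The port counts (48 × 25G downlinks and 8 × 100G uplinks per leaf, 32-port spines) are hypothetical and not tied to a specific model.

```python
def fabric_summary(leaf_down: int, leaf_down_gbps: float,
                   leaf_up: int, leaf_up_gbps: float, spine_ports: int) -> dict:
    """Size a two-tier spine-leaf fabric under the stated assumptions."""
    return {
        "spines": leaf_up,                       # one leaf uplink per spine
        "max_leaves": spine_ports,               # one spine port per leaf
        "max_servers": spine_ports * leaf_down,  # one server per leaf downlink
        # server-facing bandwidth divided by uplink bandwidth on each leaf
        "oversubscription": (leaf_down * leaf_down_gbps) / (leaf_up * leaf_up_gbps),
    }


print(fabric_summary(48, 25, 8, 100, 32))
```

With these numbers the fabric has 8 spines, up to 32 leaves and 1,536 servers, and a 1.5:1 oversubscription ratio (1,200G of server-facing capacity over 800G of uplinks).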

A spine-leaf network uses ECMP (Equal-Cost Multi-Path) routing to spread traffic dynamically across multiple paths. Compared with STP in Layer 2 networks, which blocks redundant links, this puts every link to use and greatly increases efficiency. The spine-leaf architecture also lowers the performance required of each individual switch and allows smooth scaling by adding spine switches.
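In a two-tier fabric each spine provides one equal-cost path between two leaves, so ECMP picks one of those paths per flow. This sketch, with hypothetical switch names, enumerates the paths and hashes a flow's 5-tuple onto one of them. Real ASICs use their own hash functions; CRC-32 here is an assumption for illustration.

```python
import zlib
from collections import Counter
from typing import List, Tuple

Path = Tuple[str, str, str]


def ecmp_paths(src_leaf: str, dst_leaf: str, spines: List[str]) -> List[Path]:
    """All equal-cost leaf -> spine -> leaf paths between two leaves."""
    return [(src_leaf, spine, dst_leaf) for spine in spines]


def pick_path(flow: tuple, paths: List[Path]) -> Path:
    """Hash the flow's 5-tuple so all of its packets follow one path."""
    key = "|".join(map(str, flow)).encode()
    return paths[zlib.crc32(key) % len(paths)]


spines = ["spine1", "spine2", "spine3", "spine4"]
paths = ecmp_paths("leaf1", "leaf7", spines)

# 1,000 flows that differ only in source port spread across the spines.
usage = Counter(pick_path(("10.0.1.5", "10.0.7.9", 6, sport, 443), paths)[1]
                for sport in range(40000, 41000))
print(usage)
```

Adding a fifth spine adds a fifth equal-cost path, which is how the fabric scales out without redesign.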

Data center switch deployment and management

Pay attention to the following points when deploying data center switches:

- Rack installation and cabling planning

- Switch configuration and optimization

- Monitoring and troubleshooting


What is the difference between an enterprise switch and a data center switch?

How to choose the most suitable data center switch?

There are several key factors to consider when choosing a suitable data center switch:

- Performance requirements

- Choose sufficiently high forwarding capacity and port density based on existing and future bandwidth requirements

- Ensure that the switch can support the required line-speed forwarding capacity and low latency

- Scalability

- Choose modular switches that support multiple high-speed interfaces (such as 10GbE, 25GbE, 40GbE, 100GbE)

- Ensure that the switch can flexibly expand the number of ports through stacking or modularization

- Reliability and high availability

- Choose high-reliability switches with redundant power supplies, fans, etc.

- Support high availability functions such as fast failover and self-healing

- Network function support

- Choose switches that support network virtualization protocols such as VXLAN and EVPN

- Ensure that the switch provides rich QoS, security and monitoring functions

- Management and automation

- Choose switches that support SDN control, automated configuration and other functions

- Ensure that the switch can be seamlessly integrated with existing network management tools

- Energy efficiency and cost

- Choose switches with low power consumption design to reduce operating costs

- Consider the integrated functions of the switch, such as firewall, load balancing, etc., to reduce hardware costs.
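Two figures worth computing when checking forwarding capacity are line-rate packets per second and total switching capacity. On Ethernet each frame also occupies 8 bytes of preamble and start-of-frame delimiter plus a 12-byte minimum inter-frame gap, so the worst case is minimum-size 64-byte frames. The sketch below uses a hypothetical port mix (48 × 25G + 8 × 100G) and follows the common datasheet convention of counting both directions of full-duplex ports in the capacity figure.

```python
PREAMBLE_SFD_BYTES = 8  # preamble + start-of-frame delimiter
MIN_IFG_BYTES = 12      # minimum inter-frame gap


def line_rate_pps(port_gbps: float, frame_bytes: int = 64) -> float:
    """Packets per second a port must forward to run at line rate."""
    bits_per_frame = (frame_bytes + PREAMBLE_SFD_BYTES + MIN_IFG_BYTES) * 8
    return port_gbps * 1e9 / bits_per_frame


def switching_capacity_gbps(ports: list) -> float:
    """Full-duplex switching capacity for a list of (port_count, gbps) pairs."""
    return 2 * sum(count * gbps for count, gbps in ports)


print(f"{line_rate_pps(100) / 1e6:.2f} Mpps")         # 148.81 Mpps
print(switching_capacity_gbps([(48, 25), (8, 100)]))  # 4000
```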

In addition, you should choose the appropriate manufacturer and model according to the specific application scenarios and future development needs of the data center. By comprehensively evaluating the above factors, you can choose the data center switch that best suits your needs.

In the age of AIGC and cloud computing, Asterfusion provides turnkey SONiC and ultra-low-latency solutions to enterprises and service providers with its self-developed data center switches. These data center switches have the following features:

- Ultra Low Latency

Compact fixed-configuration units with high port density, and port-to-port Layer 2/Layer 3 forwarding latency as low as ~400 ns.

- Enterprise Ready SONiC NOS

Rich Layer 2/Layer 3 and RDMA network features, such as MC-LAG, EVPN, VXLAN, DCB, RoCEv2, PFC, QCN…

- Automated O&M

ZTP, ONIE, Ansible and Python to support automated installation, operation & maintenance.

Other questions:

Data center switches are switches designed for large data center environments. Data centers are used to store and manage large numbers of computers and servers and process massive amounts of data, providing high-performance computing and cloud services.

Enterprise switches are used in smaller network environments such as campuses and office buildings. They primarily connect office equipment such as computers, phones, and printers.

Below is a table comparing data center switches and traditional enterprise switches:

| Features | Data Center Switches | Enterprise Switches |
| --- | --- | --- |
| Network Size | Large data centers | Small office buildings, campuses |
| Number of Ports | Large number of ports | Fewer ports |
| Performance Requirements | High performance, low latency | Moderate performance, less stringent latency |
| Scalability | Highly scalable | Moderate scalability |
| Reliability and Redundancy | High reliability and redundancy | Basic reliability and redundancy |
| Network Management Features | Complex network management capabilities | Simplified network management features |
| Applicable Scenarios | Large data centers, enterprise networks | Office buildings, campuses, small business networks |

What are the main components of a data center network?

The main components of a data center networking infrastructure typically include:

- Core Switches: Also known as the backbone layer, these are high-performance, high-capacity switches that connect the various parts of the data center network. They provide the primary routing and forwarding functions.

- Spine Switches: These are the upper-tier switches that connect the core layer to the leaf/access layer. They provide high-bandwidth, low-latency connectivity between different parts of the network.

- Leaf/Access Switches: These are the lower-tier switches that connect servers, storage, and other network devices directly to the data center network. They provide access-layer connectivity, and some models also offer features like Power over Ethernet (PoE).

- Server/Hypervisor Networking: This includes the network interface cards (NICs), virtual switches, and virtual routing/forwarding functionality within server hypervisors. They enable connectivity between virtual machines and the physical network.

- Storage Networking: Storage area network (SAN) switches, storage network interfaces, and protocols like Fibre Channel, iSCSI, and NVMe over Fabrics (NVMe-oF) enable high-speed, low-latency access to shared storage resources.

- Network Services: Components like load balancers, firewalls, intrusion detection/prevention systems, and network monitoring/management tools provide essential network services and security.

- Cabling and Connectivity: High-speed Ethernet cables, fiber optic links, and various port types (e.g., Ethernet, Fibre Channel, InfiniBand) interconnect the different networking components.

- Network Operating Systems: Specialized software like Cisco NX-OS, Arista EOS, or open-source platforms like Cumulus Linux provide the underlying control plane and management functionalities for the network.

The specific design and configuration of these components depend on the size, requirements, and architecture of the data center network.

Why do we require FC or SAN switch in a data center?

Data centers often require Fibre Channel (FC) or Storage Area Network (SAN) switches in addition to Ethernet switches for the following key reasons:

- Storage Connectivity:

- FC and SAN switches provide high-speed, low-latency connectivity between servers and storage arrays, optimized for storage traffic.

- They enable the creation of a dedicated, high-performance storage network separate from the general-purpose Ethernet network.

- Storage Protocols Support:

- FC and SAN switches support storage-specific protocols like Fibre Channel and iSCSI, which are widely used for connecting servers to storage systems.

- These protocols are optimized for reliable and efficient block-level data transfer required for enterprise storage applications.

- Scalability and Performance:

- FC and SAN switches can scale to support large numbers of storage devices and clients, with high bandwidth and low latency.

- They provide zoning, which works together with LUN masking on the storage systems to ensure proper isolation and access control for storage resources.

- High Availability:

- FC and SAN switches often have redundant components, such as power supplies and control modules, to provide high availability for the storage network.

- They support features like port channeling and failover mechanisms to ensure continuous access to storage in the event of component failures.

- Storage Virtualization:

- FC and SAN switches integrate with storage virtualization technologies that pool capacity from multiple storage systems.

- They enable dynamic provisioning, migration, and management of storage resources across the data center.

By utilizing dedicated FC or SAN switches, data centers can ensure reliable, high-performance, and scalable connectivity between servers and storage systems, which is essential for mission-critical applications that require fast and secure access to data.
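Zoning is the main access-control mechanism on an FC SAN switch: two ports can communicate only if they are members of at least one common zone. This sketch models WWPN-based zoning with hypothetical zone names and WWPNs; real fabrics manage zones through the switch's zoning configuration, not application code.

```python
from typing import Dict, Set

# Hypothetical zones: each contains a server HBA WWPN and a storage array port WWPN.
ZONES: Dict[str, Set[str]] = {
    "zone_db": {"10:00:00:00:c9:aa:bb:01", "50:06:01:60:3b:20:11:22"},
    "zone_web": {"10:00:00:00:c9:aa:bb:02", "50:06:01:61:3b:20:11:22"},
}


def can_communicate(wwpn_a: str, wwpn_b: str, zones: Dict[str, Set[str]]) -> bool:
    """True if both WWPNs share at least one zone."""
    return any(wwpn_a in members and wwpn_b in members for members in zones.values())


print(can_communicate("10:00:00:00:c9:aa:bb:01", "50:06:01:60:3b:20:11:22", ZONES))  # True
print(can_communicate("10:00:00:00:c9:aa:bb:02", "50:06:01:60:3b:20:11:22", ZONES))  # False
```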

What is a switch on a server?

In a server environment, the network switch is typically located within the server chassis or as a separate top-of-rack (ToR) switch serving a group of servers. It provides the necessary network connectivity and enables servers to communicate with each other and access shared resources, such as storage, applications, and cloud services.

The specific type and configuration of the switch used on a server depend on factors like the server’s network requirements, data center architecture, and the overall networking strategy.

A network switch on a server has the following key functions:

- Network Connectivity:

- The switch provides multiple Ethernet ports, allowing the server to connect to the local area network (LAN) or storage area network (SAN).

- It enables the server to communicate with other devices, such as other servers, storage systems, routers, and network services.

- Traffic Forwarding:

- The switch forwards data packets between the connected devices based on their destination MAC addresses.

- It operates at the data link layer (Layer 2) of the OSI model, making forwarding decisions quickly and efficiently.

- Collision Domain Separation:

- Switches create separate collision domains for each port, improving network performance and efficiency compared to hubs.

- This allows multiple devices to communicate simultaneously without collisions.

- VLAN Support:

- Switches can implement virtual LANs (VLANs) to logically segment the network and control broadcast domains.

- This allows for better security, traffic isolation, and efficient use of network resources.

- Port Features:

- Switches may offer advanced port-level features, such as link aggregation, port mirroring, and quality of service (QoS) configuration.

- These features enable enhanced network management, monitoring, and optimization capabilities.
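The forwarding and VLAN behavior described above can be sketched as a VLAN-aware learning switch: it learns which port each source MAC address lives on, forwards known unicast frames to a single port, and floods unknown or broadcast frames only to the other ports in the same VLAN. Port numbers, VLAN IDs, and MAC addresses here are hypothetical, and MAC-table aging is omitted for brevity.

```python
from typing import Dict, List, Tuple


class LearningSwitch:
    """Minimal VLAN-aware Layer 2 learning switch (access ports only)."""

    def __init__(self, port_vlans: Dict[int, int]) -> None:
        self.port_vlans = port_vlans                     # port -> VLAN ID
        self.mac_table: Dict[Tuple[int, str], int] = {}  # (VLAN, MAC) -> port

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the ports a frame is sent out of."""
        vlan = self.port_vlans[in_port]
        self.mac_table[(vlan, src_mac)] = in_port  # learn the sender's location
        out = self.mac_table.get((vlan, dst_mac))
        if out is not None:
            return [] if out == in_port else [out]  # known unicast
        # Unknown unicast or broadcast: flood within the VLAN, never back out in_port.
        return sorted(p for p, v in self.port_vlans.items() if v == vlan and p != in_port)


sw = LearningSwitch({1: 10, 2: 10, 3: 10, 4: 20})
print(sw.receive(1, "00:00:00:00:00:0a", "00:00:00:00:00:0b"))  # [2, 3]
print(sw.receive(2, "00:00:00:00:00:0b", "00:00:00:00:00:0a"))  # [1]
```

Port 4 never sees the flooded frame because it belongs to VLAN 20, which is the broadcast-domain isolation that VLANs provide.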

What is the difference between ToR, EoR, and MoR?

ToR (Top of Rack), EoR (End of Row), and MoR (Middle of Row) are three cabling architectures commonly used in data center network design. The main difference between them is the physical location of the switches and servers and the cabling method.

- ToR (Top of Rack):

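In the ToR architecture, one or more network switches are installed at the top of each rack. Servers connect directly to the switch at the top of their rack over short copper or fiber cables. This architecture offers simple cabling, shorter horizontal cable runs, lower latency, and easy management and maintenance. In a large data center, however, it may require a large number of switches, which increases management and configuration complexity.

- EoR (End of Row):

In the EoR architecture, network switches are installed centrally at the end of a row of racks. Servers connect to the switches at the end of the row over longer copper or fiber cables. This architecture needs fewer switches, keeps management and configuration relatively simple, and is easier to expand. However, the long horizontal cable runs increase cabling cost and can introduce signal attenuation problems.

- MoR (Middle of Row):

The MoR architecture is a compromise between ToR and EoR, with the switches located in the middle of a row of racks. By shortening horizontal cable runs while keeping the number of switches reasonable, this design combines the advantages of ToR and EoR. In some cases MoR offers better flexibility and scalability, but it may require more complex cabling planning.

Overall, the choice among ToR, EoR, and MoR depends on the data center's scale, budget, and management and expansion requirements. Each architecture has specific advantages and limitations, so the choice should be made case by case.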
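A rough way to compare EoR and MoR is to add up horizontal cable runs along a row. This back-of-the-envelope sketch assumes one cable bundle per server rack, racks 0.6 m wide, and a row of 10 server racks, with the network rack either after the last rack (EoR, position 10) or in the middle of the row (MoR, position 4.5); all figures are hypothetical. With ToR, server cabling stays inside each rack, so only the uplinks run horizontally.

```python
def horizontal_run_m(racks: int, rack_width_m: float, switch_pos: float) -> float:
    """Total horizontal distance from each server rack to the network rack."""
    return rack_width_m * sum(abs(i - switch_pos) for i in range(racks))


eor = horizontal_run_m(10, 0.6, switch_pos=10)   # network rack at the end of the row
mor = horizontal_run_m(10, 0.6, switch_pos=4.5)  # network rack in the middle of the row
print(f"EoR: {eor:.1f} m, MoR: {mor:.1f} m")
```

In this example MoR cuts the total run from about 33 m to about 15 m while still needing only one network rack per row.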
