You are here:

We hired a professional artist for this article. He didn’t show up.

And whether you think this is convenient or not, or have absolutely no idea what this means, that’s not changing any time soon. So (particularly if you don’t know about it, because if you do, we don’t have to tell you) buckle up: things are about to get weird!

Monkeys in fancy suits.

Our minds are outstanding at figuring out what to expect and what not to expect, or so we think. This is because, in the natural world (where our brains were born and developed), things are relatively physical and predictable. So, if you guess, you’ll probably be right when predicting physical things such as the (average) amount of rain that will fall in your hometown this year, the (average) height of a random person on the street, and the (average) number of babies your pregnant ex-wife is carrying.

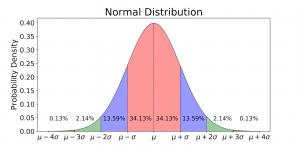

You could also adequately represent these things (called “normal” for a reason) on a normal, or Gaussian, distribution such as the following one:

No fancy artists for this one, just good ole’ Google.

The normal distribution is the boring, old, mundane world: a world where extreme outcomes are just as likely to be positive as negative, but always extremely rare. They are, well, rare. And so our brains evolved to predict, almost always accurately, what happens in this domain. Most people you meet will be around 1.60-1.75 meters tall. A pregnant woman is almost always carrying at most two babies. And, of course, your hometown shouldn’t experience torrential rain every single day of the year.
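To put a number on “rare”: in a normal distribution, the chance of landing more than k standard deviations from the average (in either direction) shrinks extremely fast as k grows. The minimal Python sketch below uses only the standard library’s `math.erfc`; the function name `two_sided_tail` is our own, for illustration.

```python
import math

def two_sided_tail(k: float) -> float:
    """Probability that a normally distributed value lands more than
    k standard deviations away from the mean, on either side."""
    return math.erfc(k / math.sqrt(2))

for k in (1, 2, 3, 5):
    print(f"beyond {k} standard deviations: {two_sided_tail(k):.2e}")
```

Roughly a third of values fall more than one standard deviation out, about 5% more than two, about 0.3% more than three, and well under one in a million more than five (the familiar 68-95-99.7 rule, extended). Because the curve is symmetric, half of each of those surprises is on the upside and half on the downside.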

So, where do we go wrong?

Things don’t always scale (and “experts” don’t seem to get it).

However, although our brains are moderately good at predicting mundane things, they fail to understand that big, complex, human-driven subjects don’t behave like natural, primitive things. We love to plan, think we’re brilliant, and forecast the future, even though we’re almost always wrong. Even worse, we beat ourselves up when we don’t have a roadmap, although roadmaps rarely hold up in the real world.

And this is far from being a lay-person issue.

Expertise is hot shit. As a society, we love to give awards and shine a light on experts who pat themselves on the back when things behave in an average way (and they get to yell ‘I told you so!’), celebrate when rare positive events happen, and give us the old ‘there was no way to predict this’ when rare events go terribly wrong and they didn’t have a plan B.

‘Experts’ and statisticians both celebrate the unexpected when it goes their way and wash their hands of its consequences when it goes wrong.

And, as we’ll see, when things aren’t mundane, they go wrong more often than expected.

Fat-tailed craziness

Remember that we told you that crazy things were coming up, back when you were standing in the chart? Well, the time has come to uncover the craziness.

As it turns out, you weren’t standing in a normal distribution. You were standing in a ‘fat-tailed’ distribution, which can look just like a normal one to the naked eye.

Pretty surprising once you figure out the difference, huh?

And so, just as you might mistake a fat-tailed distribution for a normal one before knowing the difference, our ‘experts’ (and everyone else) do the same thing: we tend to mistake fat-tailed situations for normally distributed events.

Why fat tails make more sense when representing complex systems.

For the sake of simplicity, let’s just say that complex systems (those with human elements, planning, and a scale greater than what’s possible in the ‘natural’ world) are always fat-tailed. This is because of the variability and fragility caused by human, technological, or ‘expert’ intervention.

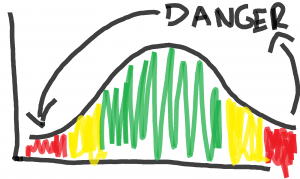

And so, whereas in a normal distribution rare events are confined to the very ends of the chart, fat-tailed systems, while keeping the same average, turn out to be far more volatile, with a greater probability of extreme results.

The red ‘dangerous’ areas don’t seem that small anymore, and that’s both very bad and very good.
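To put rough numbers on how much those tail areas grow, the sketch below compares the chance of exceeding k standard deviations under a normal distribution and under a fat-tailed one with the same mean (zero) and the same variance (one). The article doesn’t name a specific fat-tailed distribution; as an assumption, we use Student’s t with 3 degrees of freedom, rescaled by 1/√3 so its variance is 1, because its CDF has a simple closed form. Function names are our own.

```python
import math

def normal_upper_tail(k: float) -> float:
    """P(Z > k) for a standard normal Z (mean 0, variance 1)."""
    return 0.5 * math.erfc(k / math.sqrt(2))

def fat_upper_tail(k: float) -> float:
    """P(X > k) where X = T / sqrt(3) and T is Student's t with 3 degrees
    of freedom, so X also has mean 0 and variance 1.

    The t(3) CDF is F(t) = 1/2 + (1/pi) * [(t/sqrt(3)) / (1 + t^2/3) + atan(t/sqrt(3))];
    substituting t = k * sqrt(3) gives the expression returned below.
    """
    return 0.5 - (k / (1 + k * k) + math.atan(k)) / math.pi

for k in (1, 3, 5, 10):
    n, f = normal_upper_tail(k), fat_upper_tail(k)
    print(f"k={k:>2}: normal {n:.2e}  fat-tailed {f:.2e}  ratio {f / n:.1e}")
```

The pattern matches the chart: at one standard deviation the fat-tailed curve is actually the less likely of the two to be exceeded (which is why the two can look alike), but at five standard deviations it is thousands of times more likely, and at ten the gap spans many orders of magnitude.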

And so, out of extreme volatility, we get both extremely good and extremely bad outcomes, which could be tremendously helpful if we somehow manage to cap our downside.
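Here is a toy simulation of what capping the downside does (our own illustration, not a method from this article). It draws outcomes from the same unit-variance Student’s t(3) distribution used as a stand-in for “fat-tailed” above, then applies a hypothetical floor of -2.0, think of it as a loss limit you’ve insured against. As simplifying assumptions, the insurance is free and the floor value is arbitrary.

```python
import math
import random

def fat_tailed_sample(rng: random.Random, dof: int = 3) -> float:
    """One draw from Student's t with `dof` degrees of freedom (dof > 2),
    rescaled to unit variance. Uses the textbook construction
    T = Z / sqrt(V / dof), with Z standard normal and V chi-squared(dof),
    where chi-squared(dof) is Gamma(shape=dof/2, scale=2)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(dof / 2, 2.0)
    t = z / math.sqrt(v / dof)
    return t / math.sqrt(dof / (dof - 2))  # variance of t(dof) is dof/(dof-2)

def cap_downside(outcomes, floor):
    """Replace every outcome below `floor` with `floor`; gains are untouched."""
    return [max(x, floor) for x in outcomes]

rng = random.Random(42)  # fixed seed so the run is repeatable
raw = [fat_tailed_sample(rng) for _ in range(100_000)]
capped = cap_downside(raw, floor=-2.0)  # hypothetical loss limit

for name, xs in (("raw", raw), ("capped", capped)):
    print(f"{name:>6}: worst {min(xs):8.2f}  best {max(xs):6.2f}  mean {sum(xs) / len(xs):+.3f}")
```

Because every capped outcome is at least as large as the raw one, the worst case becomes the floor, the best case is unchanged, and the average can only go up. Real insurance has a price, so in practice the question is how much of that improvement the floor costs you.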

You can never be fully insured, but you can choose how to play your cards.

The point of this article is not to say that, since we live in a complex world, everything will eventually turn out wrong. It is to assure you that, while planning can be a fun activity at times, you shouldn’t feel bad for not having a plan and making things up as you go along.

In fact, it might just be the wisest thing you can do.

We’ve talked before about how to use systems that benefit from failure in business environments. Now, to close this article, we’d like to share one last message:

Taking smart risks is actually safer.

You never know how things will turn out, so your best bet is to play the long game and build positive momentum out of everything you can. You can (and should!) insure yourself up to the point where it’s reasonable, but not to the point where it handicaps you. For it is the (smart) risk-takers who, in the end, get to take home the sweetest fruit on the tree. And it’s never the low-hanging one.